On February 26 this year, KFENCE was merged into the upstream kernel source, in time for release in Linux 5.12. KFENCE is essentially an address sanitizer with overhead low enough to run in production kernels on live systems, whereas the traditional Kernel Address Sanitizer (KASAN) has so far been used only in testing and fuzzing setups. In this post I will outline how KFENCE works and how it differs from KASAN.

Kernel Address Sanitizer

First of all, let’s get back to address sanitizers. Generic KASAN as implemented in the Linux kernel uses an approach similar to the userspace AddressSanitizer (ASan) implemented in gcc and clang. A ‘shadow region’ of memory is allocated in which the kernel records, for each memory address, whether it is safe to access. Generic KASAN uses one shadow byte for every 8 bytes of memory, so the shadow region takes an amount of memory equal to 12.5% (1/8) of the memory it covers.

When a memory address is read or written, the shadow memory is checked first to see whether the address is valid. If it is not, memory is being accessed in an invalid manner (for example a buffer overflow or a use-after-free) and an error report is generated.
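The shadow check can be modelled in a few lines. This is a toy sketch, not kernel code: the class and method names are made up for illustration, and it assumes the encoding described in the KASAN documentation (shadow value 0 means all 8 bytes are accessible, a value N from 1 to 7 means only the first N bytes are, and a negative value means none are).

```python
KASAN_SHADOW_SCALE_SHIFT = 3            # one shadow byte per 2**3 = 8 bytes
GRANULE = 1 << KASAN_SHADOW_SCALE_SHIFT


class ShadowMemory:
    """Toy model of generic KASAN shadow memory for one small region."""

    def __init__(self, region_size):
        # Start fully poisoned: a negative shadow value marks the whole
        # 8-byte granule as inaccessible.
        self.shadow = [-1] * (region_size // GRANULE)

    def unpoison(self, start, size):
        """Mark [start, start + size) accessible; start must be 8-byte aligned."""
        assert start % GRANULE == 0
        full, rest = divmod(size, GRANULE)
        first = start >> KASAN_SHADOW_SCALE_SHIFT
        for i in range(first, first + full):
            self.shadow[i] = 0                # 0: all 8 bytes accessible
        if rest:
            self.shadow[first + full] = rest  # N: only the first N bytes accessible

    def is_poisoned(self, addr):
        """Return True if a 1-byte access at addr must be reported."""
        value = self.shadow[addr >> KASAN_SHADOW_SCALE_SHIFT]
        if value == 0:
            return False
        if value < 0:
            return True
        return (addr & (GRANULE - 1)) >= value


shadow = ShadowMemory(region_size=64)
shadow.unpoison(start=16, size=13)      # a 13-byte object at offset 16
print(shadow.is_poisoned(16))           # first byte of the object
print(shadow.is_poisoned(28))           # last byte of the object
print(shadow.is_poisoned(29))           # one byte past the end
```

In the real kernel the compiler inserts a check like `is_poisoned` before every memory access, which is where the overhead comes from.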

If we were able to do these checks constantly, a large share of the security issues we deal with due to the use of memory-unsafe languages would of course be history. However, validating every single memory access adds a huge performance overhead that is unacceptable in production kernels.

KFENCE

Enter KFENCE. Although KFENCE is designed for the same purpose and detects some of the same errors, the way it works and the number of errors it will catch are quite different. Most importantly, it is likely to miss many errors, for reasons I will explain below.

KFENCE will detect out-of-bounds writes, out-of-bounds reads (with some limitations) and use-after-free.

The inner workings

When enabled, on boot KFENCE sets up an object pool of a fixed size (configurable using CONFIG_KFENCE_NUM_OBJECTS, the default is 255).

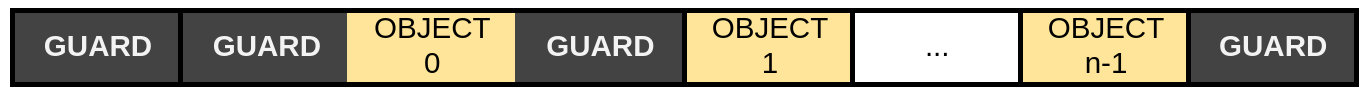

The object pool consists of two pages per object - one to contain the object and one adjacent guard page. Two additional guard pages are allocated at the beginning of the pool to protect the first object (two pages rather than one to have an even number of pages).

The resulting object pool looks like this:

Every object in the pool is added to a freelist.
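The pool size follows directly from this layout. The sketch below assumes 4 KiB pages; the helper names are invented for illustration.

```python
PAGE_SIZE = 4096            # assumption: 4 KiB pages
NUM_OBJECTS = 255           # default CONFIG_KFENCE_NUM_OBJECTS


def pool_pages(num_objects):
    """Two leading guard pages, then one (object page, guard page) pair per object."""
    return 2 + 2 * num_objects


def object_page_index(slot):
    """Index of the page that holds the object for a given pool slot."""
    return 2 + 2 * slot


def is_guard_page(page_index):
    """Pages 0 and 1 are guards; after that, odd-numbered pages are guards."""
    return page_index < 2 or page_index % 2 == 1


total = pool_pages(NUM_OBJECTS)
print(total, "pages,", total * PAGE_SIZE // 1024, "KiB")
print([("guard" if is_guard_page(i) else "object") for i in range(6)])
```

With the default of 255 objects this comes to 512 pages, or 2 MiB of memory dedicated to the pool.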

Object allocation

KFENCE hooks into the SLAB/SLUB allocator (the SLOB allocator is not supported) but only takes over one allocation per sample interval. The interval is configurable (in milliseconds) through CONFIG_KFENCE_SAMPLE_INTERVAL or the kfence.sample_interval command line parameter. All other allocations are still handled by the SLAB/SLUB allocator as usual and are not monitored.
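To see which interval a running system uses, the parameter can be read back from sysfs. This is a small sketch that assumes the parameter is exposed at the usual module-parameter path; the function name is made up, and it simply returns None when the file is missing.

```python
from pathlib import Path

# Assumption: the boot parameter kfence.sample_interval is also visible as a
# module parameter under /sys/module/kfence/parameters/.
PARAM = Path("/sys/module/kfence/parameters/sample_interval")


def read_sample_interval(path=PARAM):
    """Return the sample interval in milliseconds, or None if unavailable."""
    try:
        return int(path.read_text().strip())
    except (OSError, ValueError):
        return None


interval = read_sample_interval()
if interval is None:
    print("KFENCE parameter not found (kernel built without KFENCE?)")
elif interval == 0:
    print("KFENCE is disabled")
else:
    print(f"KFENCE samples one allocation roughly every {interval} ms")
```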

When KFENCE takes the allocation, it looks for a free object pool slot in the freelist. If it doesn’t find any, it lets the SLAB/SLUB allocator handle the allocation.
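The sampling decision can be sketched as a simple time gate in front of the freelist. This is a simplified model with invented names, not the kernel's implementation; in particular, how the real gate behaves when the pool is exhausted is not modelled here.

```python
from collections import deque


class KfenceSampler:
    """Toy model: at most one allocation per sample interval goes to the pool."""

    def __init__(self, num_objects, sample_interval_ms):
        self.freelist = deque(range(num_objects))   # free pool slots
        self.interval = sample_interval_ms
        self.next_sample_at = 0                     # time the gate next opens

    def alloc(self, now_ms):
        """Return a pool slot, or None when the regular allocator handles it."""
        if now_ms < self.next_sample_at:
            return None                 # gate closed: not sampled
        self.next_sample_at = now_ms + self.interval
        if not self.freelist:
            return None                 # pool exhausted: fall back
        return self.freelist.popleft()


sampler = KfenceSampler(num_objects=2, sample_interval_ms=100)
# One allocation every 10 ms for half a second.
taken = [(t, sampler.alloc(t)) for t in range(0, 500, 10)]
print([(t, slot) for t, slot in taken if slot is not None])
```

Of the 50 allocations in this run, only the ones at 0 ms and 100 ms end up in the pool; after that the two slots are in use and everything falls back to the regular allocator.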

If a free slot is found, it flips a coin to decide whether to place the object at the very beginning or at the very end of the object page. The object is usually smaller than a page, so if it is placed at the beginning of the page, the adjacent guard page catches invalid accesses below the object; if it is placed at the end of the page, the guard page catches invalid accesses beyond the object. The unused space in the object page is still guarded, but to a lesser extent. KFENCE calls this area the red zone and fills it with a canary value.
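The placement arithmetic might look like the sketch below. It assumes 4 KiB pages, and the `align` parameter is an assumption used to illustrate that a right-aligned object may need rounding down to satisfy the cache's alignment, which leaves a few red-zone bytes between the object and the guard page.

```python
import random

PAGE_SIZE = 4096    # assumption: 4 KiB pages


def place_object(size, right, align=1):
    """Return (object_start, object_end) as offsets inside the object page."""
    if right:
        start = (PAGE_SIZE - size) & ~(align - 1)  # round down to keep alignment
    else:
        start = 0
    return start, start + size


def red_zones(start, end):
    """Byte ranges of the object page not covered by the object."""
    zones = []
    if start > 0:
        zones.append((0, start))
    if end < PAGE_SIZE:
        zones.append((end, PAGE_SIZE))
    return zones


right = random.random() < 0.5           # the coin flip
start, end = place_object(size=73, right=right, align=8)
print("right" if right else "left", hex(start), hex(end), red_zones(start, end))
```

For a 73-byte object with 8-byte alignment placed on the right, this gives page offsets 0xfb0 up to 0xff8, the same low address bits as the object in the example report further down.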

The following illustrates the pool layout in more detail (borders are page boundaries):

During the object’s lifetime

While the object is alive, reads and writes to the object itself proceed exactly as they would for any normally allocated object. There are no hooks on memory access, so this is as fast as any normal memory access.

Read/write accesses outside the bounds of the object will be detected if the access lands in a guard page. When that happens, KFENCE handles the page fault, reports the condition and then removes the restrictions from the guard page so the faulty code can execute (wrongly) as normal.

An out-of-bounds read that lands in the red zone will not be detected, but an out-of-bounds write may be detected on free, see below.
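The three cases can be summarised in one function. This is only an illustrative model with made-up names; offsets are relative to the start of the object page, and 4 KiB pages are assumed.

```python
PAGE_SIZE = 4096    # assumption: 4 KiB pages


def classify_access(offset, obj_start, obj_end):
    """Classify an access by offset from the start of the object page.

    Offsets below 0 or at/after PAGE_SIZE land in a neighbouring guard page.
    """
    if offset < 0 or offset >= PAGE_SIZE:
        return "guard page: page fault, reported immediately"
    if obj_start <= offset < obj_end:
        return "object: normal access"
    return "red zone: reads missed, writes may be found on free"


# A 73-byte object placed at the end of its page (offsets 0xfb0..0xff8).
for off in (0xfb0, 0xff9, 0x1000, -1):
    print(hex(off), "->", classify_access(off, 0xfb0, 0xff9))
```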

Freeing the object

When the object is freed, the red zone in the object page is checked. If any of the canary values have been modified, an out-of-bounds write occurred in the red zone during the object’s lifetime and an error report is generated. Of course this error report is not very specific - in this case we can only detect that the invalid write happened, but not at which point in the execution of the kernel it happened.
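A canary check of this kind can be sketched as follows. The pattern used here (a fixed byte XORed with the low three address bits) is an assumption for illustration, as are the function names; the point is only that the check compares every red-zone byte against its expected value at free time.

```python
PAGE_SIZE = 4096    # assumption: 4 KiB pages


def canary(offset):
    """Assumed pattern: a fixed byte XORed with the low three address bits."""
    return 0xAA ^ (offset & 0x7)


def fill_red_zone(page, obj_start, obj_end):
    """Write the canary into every byte of the page outside the object."""
    for off in range(PAGE_SIZE):
        if not obj_start <= off < obj_end:
            page[off] = canary(off)


def check_on_free(page, obj_start, obj_end):
    """Return offsets of red-zone bytes whose canary was overwritten."""
    return [off for off in range(PAGE_SIZE)
            if not obj_start <= off < obj_end and page[off] != canary(off)]


page = bytearray(PAGE_SIZE)
fill_red_zone(page, 0xFB0, 0xFF9)
page[0xFF9] = 0xAC                      # stray one-byte write past the object
print([hex(off) for off in check_on_free(page, 0xFB0, 0xFF9)])
```

A stray write that happens to store exactly the expected canary byte would go unnoticed by such a check.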

The object page is then marked protected, so any later access triggers a page fault and generates a use-after-free error report.

The freed page is added at the tail of the freelist, so that use-after-frees in the freed page can be detected for as long as possible.
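The effect of freeing to the tail is easy to see with a queue. This is a minimal sketch with made-up function names, using four slots instead of 255.

```python
from collections import deque

freelist = deque([0, 1, 2, 3])      # free pool slots, head on the left


def kfence_alloc():
    return freelist.popleft()       # allocations take from the head


def kfence_free(slot):
    freelist.append(slot)           # freed slots go to the tail


a = kfence_alloc()                  # takes slot 0
kfence_free(a)                      # slot 0 stays protected while it waits
reused = [kfence_alloc() for _ in range(4)]
print(reused)
```

Slot 0 is only handed out again after every other free slot has been used, which keeps its page protected for the longest possible time.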

Error reporting

When any of these errors occur, some effort is made to make the kernel execute as ‘normal’ as possible, i.e. by unprotecting the guard page in case an access occurs there.

An error report from KFENCE is written to the dmesg ring buffer and looks like this (from the docs):

==================================================================

BUG: KFENCE: memory corruption in test_kmalloc_aligned_oob_write+0xef/0x184

Corrupted memory at 0xffffffffb6797ff9 [ 0xac . . . . . . ] (in kfence-#69):

test_kmalloc_aligned_oob_write+0xef/0x184

kunit_try_run_case+0x51/0x85

kunit_generic_run_threadfn_adapter+0x16/0x30

kthread+0x137/0x160

ret_from_fork+0x22/0x30

kfence-#69 [0xffffffffb6797fb0-0xffffffffb6797ff8, size=73, cache=kmalloc-96] allocated by task 507:

test_alloc+0xf3/0x25b

test_kmalloc_aligned_oob_write+0x57/0x184

kunit_try_run_case+0x51/0x85

kunit_generic_run_threadfn_adapter+0x16/0x30

kthread+0x137/0x160

ret_from_fork+0x22/0x30

CPU: 4 PID: 120 Comm: kunit_try_catch Tainted: G W 5.8.0-rc6+ #7

Hardware name: QEMU Standard PC (i440FX + PIIX, 1996), BIOS 1.13.0-1 04/01/2014

==================================================================

Kernel pointers are by default obfuscated to avoid the reports being used to leak information about the kernel base address (leading to a KASLR bypass).
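For anyone who wants to feed these reports into an error reporting system, the header line is easy to pick apart. The regular expression below is a sketch based only on the sample report above; other report types may be worded differently, and the function name is made up.

```python
import re

HEADER = re.compile(
    r"BUG: KFENCE: (?P<kind>.+?) in (?P<func>[\w.]+)"
    r"\+(?P<off>0x[0-9a-f]+)/(?P<len>0x[0-9a-f]+)"
)


def parse_kfence_header(line):
    """Extract the error type and faulting function from a report header."""
    match = HEADER.search(line)
    if match is None:
        return None
    return {
        "kind": match["kind"],
        "function": match["func"],
        "offset": int(match["off"], 16),
    }


line = "BUG: KFENCE: memory corruption in test_kmalloc_aligned_oob_write+0xef/0x184"
print(parse_kfence_header(line))
```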

Purpose

As we’ve seen, because of the limited object pool and infrequent allocations, the chances of KFENCE catching a memory error are actually quite low - a lot of stars need to align for an error to be detected. However, all of this comes with practically no overhead.
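A back-of-the-envelope calculation shows how small the sampled share is. The allocation rate below is a purely hypothetical workload, and the 100 ms interval is the documented default for CONFIG_KFENCE_SAMPLE_INTERVAL.

```python
def sampled_fraction(sample_interval_ms, allocs_per_second):
    """Upper bound on the share of allocations that KFENCE guards."""
    samples_per_second = 1000 / sample_interval_ms
    return min(1.0, samples_per_second / allocs_per_second)


# Hypothetical workload: 100,000 slab allocations per second.
fraction = sampled_fraction(sample_interval_ms=100, allocs_per_second=100_000)
print(f"{fraction:.4%} of allocations are sampled at most")
```

Under these assumptions at most one allocation in ten thousand is guarded, and the buggy access still has to land in a guard page or be a write into the red zone.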

KASAN has a much, much higher chance of catching errors as it monitors all memory accesses, but its performance impact makes it practically unusable on production kernels. For this reason, KASAN is mostly used on development systems, during automated tests and on fuzzing setups like syzbot. These are all quite synthetic workloads and mostly run on virtual machines, so they touch only a small set of drivers.

KFENCE can, due to its low overhead, run on any Linux system: desktops, servers, embedded devices, Android smartphones and so on, all of which see real-world workloads and use a large variety of drivers and configurations. It is also non-intrusive: it only observes and reports, and makes no attempt to prevent harm by stopping execution - something Linus Torvalds has always been quite vocal about.

The sole purpose is simple - error reports from real-world systems running real workloads. The more distributions enable this and preferably add it to their error reporting systems (such as Ubuntu’s apport), the more systems will run with KFENCE enabled and therefore the more chances an error will be caught.

Of course this is not meant to replace development testing or fuzzing; it is meant to complement them and widen memory error detection coverage of the kernel source code.

Any effects on kernel exploitation?

In theory, a buffer overflow exploit executed on a KFENCE object might be detected and miss its intended overwrite target as the vulnerable object is located in the KFENCE pool. This is in no way a defense - an attacker can trivially bypass KFENCE by simply executing a no-op allocation or depleting the KFENCE object pool first. Memory exploits often already require precisely setting up the heap to be successful, so this is a very minor obstacle to take care of.

An effective mechanism against memory exploits is randomizing allocations.

References

- KASAN documentation: https://github.com/torvalds/linux/blob/master/Documentation/dev-tools/kasan.rst

- KFENCE documentation: https://github.com/torvalds/linux/blob/master/Documentation/dev-tools/kfence.rst